AI: Risk or Opportunity?

It’s difficult to avoid the noise currently surrounding generative AI. Based on many recent conversations, this is an issue that needs to be approached with some care. Certainly, from the security and resilience perspective, we need to think about the impact of these solutions and how we can provide a useful framework for security, privacy and governance in relation to AI-driven apps. Put simply, how do we make sure we’re managing the potential risks while capitalizing on any opportunities?

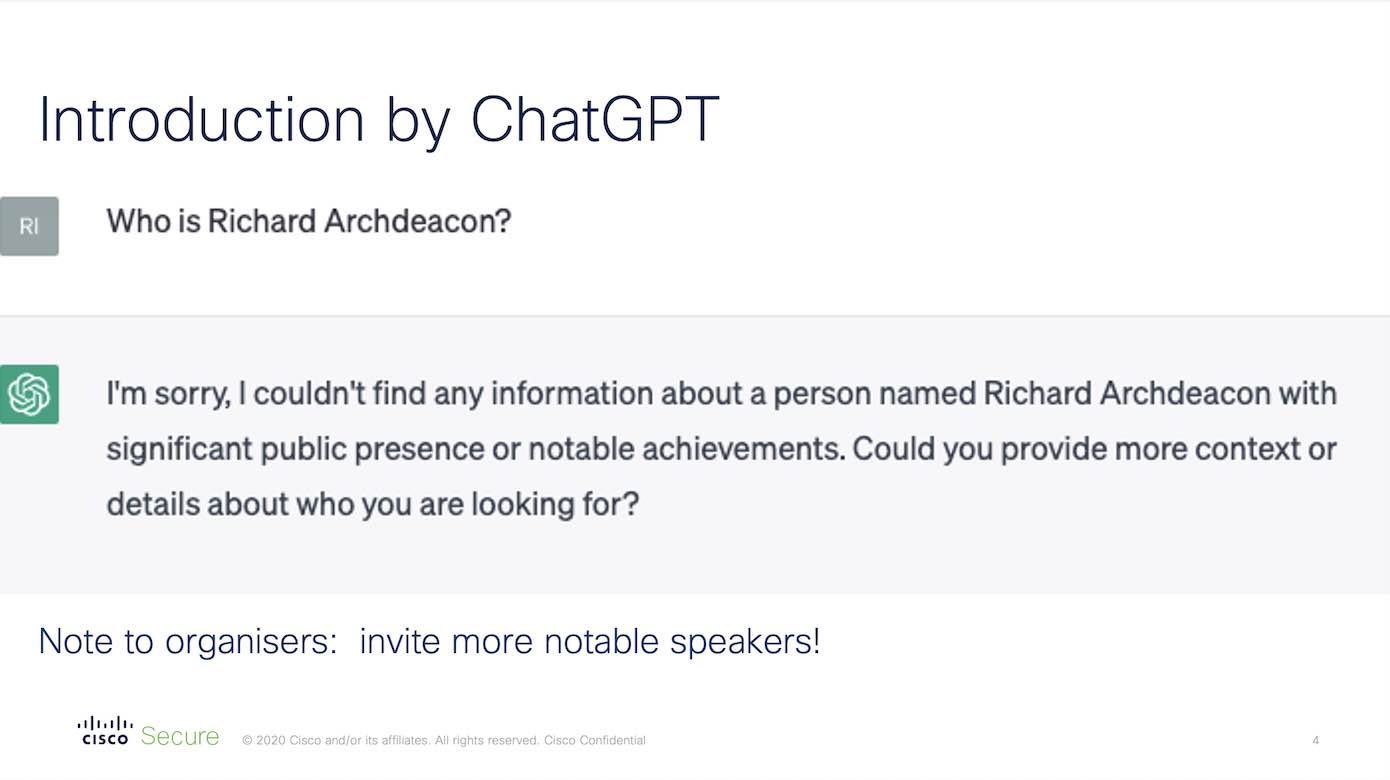

“Who is Richard Archdeacon?”

To some it’s generative AI, to others it’s Machine Learning. Perhaps the most useful description of AI solutions is simply: “the practical application of clever maths.”

In other words, we need to remind ourselves that these tools are not magic. They’re simply the interaction of algorithms with a defined data set.

To illustrate the point and prove my dedication to keeping fellow CISOs fully informed, I decided to test the new capability by asking an AI tool to generate an introductory slide for a presentation that I was giving at a conference.

My attempt to cut corners produced a result that was none too complimentary. But who am I to disagree? It was certainly appreciated by the audience!

This experiment did make me think. The information used to generate my introduction was taken from a set of data that was static at that time. But what if it could be manipulated? Would it be possible for me to “poison” selected online platforms with fake content about Nobel prizes, international sporting achievements and previous roles as a leading Hollywood actor? It’s an almost textbook illustration of the well-known GIGO (garbage in, garbage out) issue and it raises an important question for the proponents of AI. How do we know that the data trawled by these apps has not been poisoned, especially since such an attack would be relatively simple to implement? (With that in mind, expect to see an increasing recognition of the benefits that can be gained through vaccinating datasets against adversarial attacks.)

Or, as we have heard already, what if confidential information had been accidentally shared and was now in the public domain? This could expose an organization to any number of business risks, from revealing vulnerabilities to compromising IP claims.

That is why there is now a growing body of commentary recognizing how the rewards of generative AI are counterbalanced by some very real security risks.

For the CISO there should be an inside/outside view. What solutions are being developed internally and what controls should be put in place? What solutions are being introduced into the organization and how do we make sure they’re doing the job?

There are also ethical questions to be considered, emphasizing the importance of effective data governance structures, policies and procedures.

A business-driven approach

The capabilities of a new technology and its potential for future development are key considerations that guide investment decisions for any business. Equally important for the CISO is the necessity to understand the risks a technology may pose for data governance, privacy and security.

Whether AI is the best tool for a particular job will depend on whether it supports productivity and builds business resilience in line with the practical priorities a CISO faces.

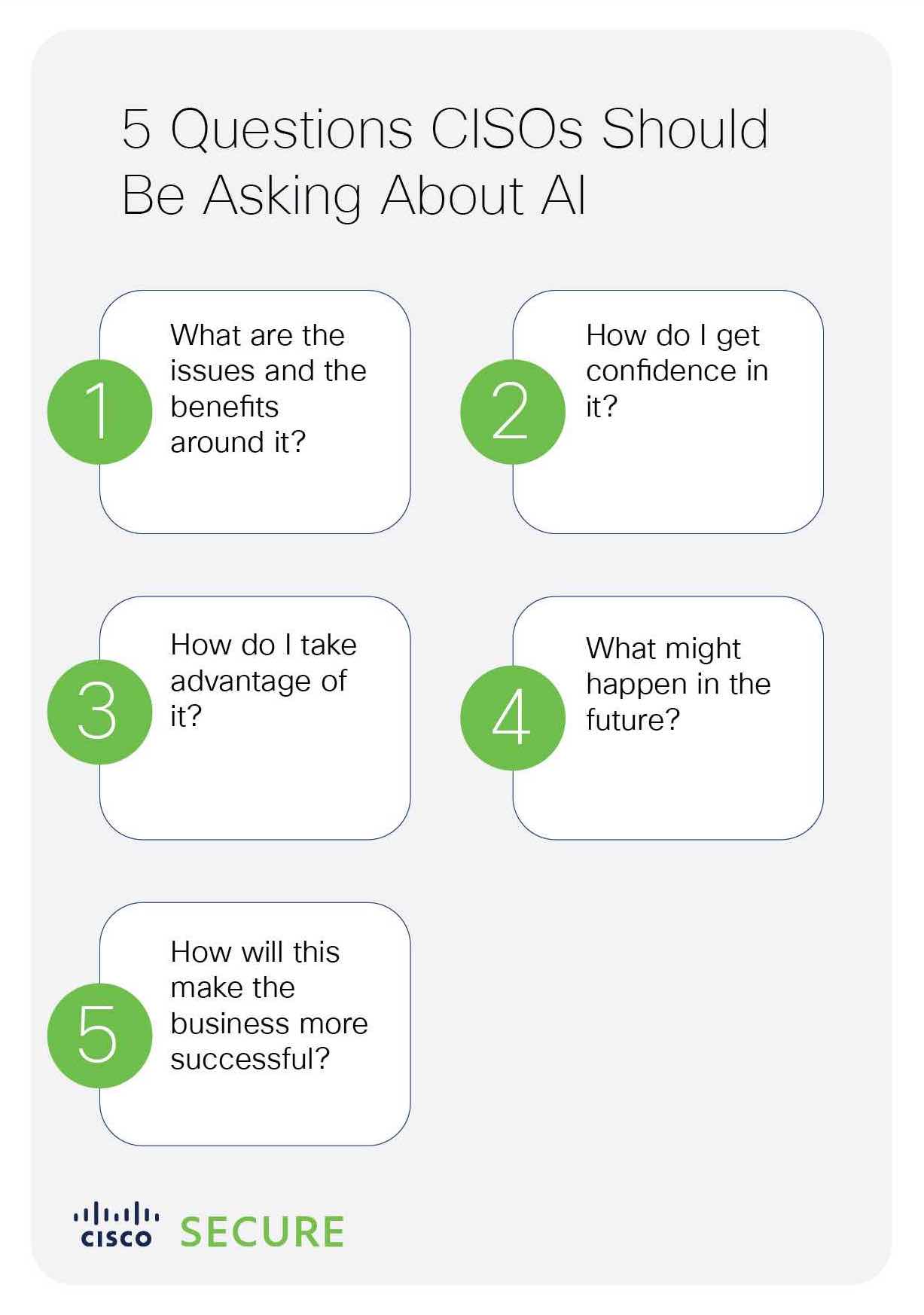

So, just like any other technology investment, our initial questions should be about the operational capability of AI:

What are the issues and the benefits around it?

How do I get confidence in it?

How do I take advantage of it?

What might happen in the future?

How will this make the business more successful?

When looking further into any such solutions, the risks and opportunities need to be understood. In short: will they introduce new weaknesses or vulnerabilities?

With these considerations in mind, I see a series of assessments being undertaken which may well include an extension of the current approach to third-party solutions.

More compliance for the CISO to worry about!

AI and governance

The first step for a CISO is to look for a clear list of principles to ensure that ethical data governance is built in from the start when AI solutions are implemented. Cisco has a well-established set covering:

Guidance and Oversight

Controls

Incident Management

Industry Leadership

External Engagement

Together, these provide a sound basis for developing trust in any solution. For example, knowing that there is governance in place, that privacy and unintended bias are recognized and addressed, and that incidents are managed helps to mitigate adoption risk. Privacy will be a key issue where generative AI apps store information from user inputs and use it to generate additional content.

When I mention the Cisco principles and framework to CISOs, the reaction has been mixed, ranging from “That is impressive.” to “May I get a copy please?” Although this feedback is purely anecdotal, it shows that this is an area of interest to them, that there is a gap to be filled, and that Cisco is recognized as having already started to fill it.

Regulators and legislators are already reviewing new controls in most countries, including the US, EU and the UK, while generative AI has been banned in some countries including Italy, France and India. These developments need to be watched, as this will be another area for CISOs to monitor.

Using AI (or clever maths) in practice

To understand how to use AI (or clever maths) in a practical sense, the way Cisco Duo has developed its Trust Monitor solution provides a case study. Trust Monitor creates an internal data set, uses AI to learn what secure behaviour looks like and then (automatically) alerts administrators when something looks risky.
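To make the "learn what normal looks like, then alert on the unusual" idea concrete, here is a deliberately tiny sketch. It is not how Trust Monitor works internally; the event fields (`user`, `device`, `country`) and both function names are hypothetical, and a real product would use far richer signals and statistical models rather than a simple lookup.

```python
from collections import defaultdict


def build_baseline(events):
    """Record, per user, every (device, country) pair seen in past logins."""
    baseline = defaultdict(set)
    for event in events:
        baseline[event["user"]].add((event["device"], event["country"]))
    return baseline


def looks_risky(baseline, event):
    """Flag a login whose (device, country) pair was never seen for this user."""
    seen = baseline.get(event["user"], set())
    return (event["device"], event["country"]) not in seen


# Hypothetical login history used to learn "normal" behaviour.
history = [
    {"user": "alice", "device": "laptop-1", "country": "GB"},
    {"user": "alice", "device": "phone-7", "country": "GB"},
    {"user": "bob", "device": "laptop-9", "country": "US"},
]
baseline = build_baseline(history)

familiar_login = {"user": "alice", "device": "laptop-1", "country": "GB"}
unfamiliar_login = {"user": "alice", "device": "tablet-3", "country": "BR"}

for login in (familiar_login, unfamiliar_login):
    status = "ALERT" if looks_risky(baseline, login) else "ok"
    print(status, login)
```

Even a toy like this shows why the quality of the underlying data set matters: whatever ends up in the history defines what the system treats as normal.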

"We created a data governance team to look at every use case in which we wanted to apply AI techniques. This team included all stakeholders. Not only Engineers, but we also included perspectives for legal, privacy, and ethics concerns. In that way, we could decide whether this was the right solution for the use case and, if so, we ensured we had a full understanding of how we could apply our principles." - Joe Duggan, Product Manager at Cisco Duo

This ensures a development approach which works to protect the security of users with systems that anonymize and obfuscate personal user details without impacting functionality.
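One common way to obscure personal identifiers while keeping records usable for analysis is a keyed hash. The sketch below is an assumption for illustration only, not a description of Cisco Duo's implementation; the function name `pseudonymize` and the example key are hypothetical. Strictly speaking this is pseudonymization rather than full anonymization, because anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC).

    The same input and key always give the same token, so records can
    still be linked, but the original value is not stored in the data set.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key; in practice it would come from a secrets manager.
example_key = b"replace-with-a-managed-secret"

token = pseudonymize("alice@example.com", example_key)
print(token)
```

Using a keyed hash rather than a plain one means an attacker who obtains the data set cannot simply hash a list of known email addresses to reverse the tokens without also obtaining the key.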

In addition, policies around secure by design, data handling, retention and deletion are in place from the start.

These principles are also top of mind as Cisco Duo integrates with an increasing number of solutions across the wider Cisco Security portfolio. So, a principled approach is embedded into the whole engineering process, increasing the opportunity to protect users whilst reducing any risk.

Asking about this type of approach may be one way in which the CISO can assess the risk of the solution being provided.

A sense of perspective

AI is going to continue making an impact, but it will never be the whole answer to every question for the business. In those situations where it is useful, we will need to have a view on how any associated risks are managed.

For security specialists, AI will remain a genuinely useful tool, automating many mundane tasks and doing a lot of heavy lifting. There is a growing body of work examining LLMs and AI, covering both emerging use cases and potential security issues. Without the right controls in place, however, AI won’t always be the best answer. It is important to be able to demonstrate that the use of any AI is based on sound principles to build confidence and acceptance in its use.

In fact, as I’ve discovered myself, it might not even be the best tool for writing an introduction to a presentation.

Knowledge may be the wing wherewith we fly to heaven – but we had better make sure it is secure.

Editor's Note: This blog was updated on 8/31/23 to reflect new developments in AI technology and at Cisco.