How to Monitor GitHub for Secrets

00. Introduction

Publishing sensitive information to version control systems like GitHub is a common risk for organizations. There have been documented cases of developers accidentally publishing secrets such as API keys only to have them scraped and used by attackers moments later.

As awareness of the problem increases, we have seen a rise in tools and services designed to search repositories for secrets on an ad-hoc basis, as well as tools that integrate within a continuous integration (CI) pipeline to prevent secrets from being committed in the first place.

In this article, we'll explore the extent of this problem, and show multiple ways to monitor GitHub for secrets depending on your scenario. We're also open-sourcing a tool we wrote called secret-bridge to help your organization detect secrets as part of a CI pipeline, even if you don't have control over the repositories yourself.

01. Measuring the Problem

Before we talk about how to detect secrets, it’s important to understand the scope of the problem. In a paper titled How Bad Can It Git? researchers from North Carolina State University and Cisco examined accidental leakage of authentication secrets (such as API keys or private keys) across GitHub. This study is unique in that it is the first exploration into the scale of the problem that otherwise has largely been reported anecdotally.

Their approach combined two techniques: targeted searches through the GitHub API, which provided near real-time secret detection, and analysis of weekly snapshots of GitHub data made available on Google BigQuery. Together, these uncovered leaked secrets in over 100,000 repositories, with thousands more secrets committed daily.

Furthermore, they found that their search method’s “median time to discovery was 20 seconds, with times ranging from half a second to over 4 minutes, and no discernible impact from time-of-day.”

In short: secrets are committed often, and are discoverable very quickly, likely before the affected parties have time to react. Attackers can use, and have used, similar techniques to identify secrets and exploit them. Fortunately, organizations can use these and other techniques to monitor their own repositories for committed secrets.

02. How to Find and Prevent Secrets on GitHub

Depending on the scenario, it’s possible to receive near real-time notifications that secrets have been published to a repository or, even better, prevent the secrets from being published in the first place.

You Want to Study Historical GitHub Data

Before discussing how to find and prevent new secrets from being committed, it helps to know where to find historical GitHub data. This data is useful for studying issues like secret leakage across all of GitHub over time.

There are a few academic services that gather this data and make it available. Some, like GHTorrent and GH Archive, make data available as snapshots for offline processing. There are also datasets available on search platforms such as Google BigQuery (from GH Archive, GHTorrent, and even GitHub itself) that allow queries to be executed against historical data.

While historical data is incredibly useful for measuring the scale of the problem or identifying trends over time, most organizations will be more interested in how to monitor for or prevent new secrets from being committed in the future. There are a few methods to accomplish this, depending on the scenario.

You Have Full Control Over the Development Environment

Git has the ability to create hooks, which allow you to run a script at various points during the Git workflow that determines whether the workflow should continue. If you have control over the development environment used by committers, you can create a pre-commit hook that checks for sensitive information before allowing the commit to occur. This is the preferred way to prevent secrets from ever being committed to a repository.
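As a rough illustration of the idea, a pre-commit hook could be a small Python script that inspects the staged diff and exits non-zero when something looks like a secret. The sketch below is not a replacement for a maintained scanner: the two patterns are examples only, and the function names `find_secrets` and `main` are hypothetical.

```python
#!/usr/bin/env python3
"""Illustrative pre-commit check: flag staged changes that look like secrets."""
import re
import subprocess
import sys

# Example patterns only; a real deployment would rely on a maintained scanner.
PATTERNS = [
    re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    re.compile(r"AKIA[0-9A-Z]{16}"),  # commonly cited shape of an AWS access key ID
]


def find_secrets(diff_text):
    """Return the added lines of a unified diff that match any pattern."""
    hits = []
    for line in diff_text.splitlines():
        # Inspect added lines only, skipping the "+++ b/file" header lines.
        if line.startswith("+") and not line.startswith("+++"):
            if any(pattern.search(line) for pattern in PATTERNS):
                hits.append(line[1:].strip())
    return hits


def main():
    """Scan the staged diff; a non-zero return value should abort the commit."""
    diff = subprocess.run(
        ["git", "diff", "--cached", "--unified=0"],
        capture_output=True, text=True, check=True,
    ).stdout
    hits = find_secrets(diff)
    for hit in hits:
        print(f"possible secret in staged changes: {hit[:40]}", file=sys.stderr)
    return 1 if hits else 0
```

To act as a hook, the script would be saved as an executable `.git/hooks/pre-commit` and end with `sys.exit(main())`, since Git aborts the commit when the hook exits with a non-zero status.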

While you can use any secret scanning tool such as truffleHog or gitleaks in a pre-commit hook, other tools such as detect-secrets from Yelp or git-secrets from Amazon Web Services make this step even easier by handling the installation for you.

Hooks are local to the development machine, and are not committed to the repository. You can emulate similar behavior by committing hooks to a separate folder in the repository and including a script to copy or link the hooks into .git, but this is not an automatic process. Tools like pre-commit make this process a bit easier.

If you’re using GitHub Enterprise, you have the option of using pre-receive hooks to run secret scanning tools before accepting the commit into the remote repository. GitLab offers similar hooks, including a predefined blacklist designed to catch secrets being committed without the need for a separate scanning tool. Even though these checks happen server-side, a commit that fails pre-receive hooks is rejected and will not appear in the remote repository’s commit history.

You Have Control of the Repository or GitHub Organization

GitHub supports webhooks which can be triggered for various events in a repository or organization. The push event will tell you when new commits are pushed to a repository, and you can use these to trigger secret scanning tools.

Receiving webhooks will not prevent secrets from being committed to the upstream repository (as opposed to pre-commit or pre-receive hooks), but you will be notified immediately when commits are made and can use this to rapidly respond to found secrets. This could mean automatic revocation, rollback of repository state, or alerting based on the results of the scan.
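A minimal sketch of the receiving side is shown below, assuming the webhook was configured with a shared secret so that GitHub signs each delivery in the `X-Hub-Signature-256` header. The function names are hypothetical, and the HTTP server that would call them is left out.

```python
import hashlib
import hmac
import json


def signature_is_valid(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check the HMAC-SHA256 signature GitHub sends with a webhook delivery."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature_header)


def commits_to_scan(body: bytes):
    """Return (repository full name, commit SHA) pairs from a push payload."""
    payload = json.loads(body)
    repo = payload["repository"]["full_name"]
    return [(repo, commit["id"]) for commit in payload.get("commits", [])]
```

Each returned pair could then be handed to whichever scanning tool you use, after the signature check has passed.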

It should be noted that removing secrets from a Git repository after they’ve been committed is a painful process. You must assume that any secret that does get committed is compromised, and should be invalidated if at all possible, regardless of the time it takes to scrub the secret from the repository. GitHub’s article on removing sensitive data from a repository demonstrates the process required to scrub sensitive information from GitHub, including all the ways it can go wrong. Git is very good at its job of keeping track of all historic repository states, so it is correspondingly difficult to “change history” by removing commit contents.

You’re a Service Provider Wanting to Keep Customers Safe

It’s been documented that service providers such as Microsoft monitor GitHub for API keys accidentally committed. GitHub recently made this same capability accessible to other service providers through their Token Scanning service.

In this scenario, you as a service provider provide GitHub regular expressions that match your service’s tokens and they alert you via webhook when tokens are found. This frees you from the need to monitor specific repositories as GitHub will monitor all commits for your service’s tokens. By leveraging this product, you can monitor for your own tokens being uploaded and revoke them before they can be abused.
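To make the regular expression part concrete, here is a sketch for an invented token format. The `acme_` prefix and the 32-character hex body are assumptions made up for this example and do not describe any real provider's tokens.

```python
import re

# Hypothetical token format: "acme_" followed by 32 lowercase hex characters.
TOKEN_PATTERN = re.compile(r"\bacme_[0-9a-f]{32}\b")


def find_tokens(text):
    """Return every substring of the text that matches the token format."""
    return TOKEN_PATTERN.findall(text)
```

A distinctive fixed prefix keeps a pattern like this precise, which matters when it is matched against every commit.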

You Control Neither the Repository nor the Developer Machines

All of the previous methods of secret detection and prevention require some level of control over the repository or organization. However, if you cannot use pre-commit hooks or receive webhooks, you still have options. GitHub exposes two APIs, the Events and Search APIs, which provide near-real-time information about commits, and can be scoped on a repository, organization, or user basis.

While the Events and Search APIs fill an important gap when it comes to secret monitoring, there are downsides to consider with both approaches. The Search API provides search results for all public repositories and allows results to be sorted by the time they were indexed, making it possible to identify new secrets a short time after they were committed. However, as we detail in the Limitations of Existing Methods section below, the Search API is limited in that it can only search for hardcoded values, as opposed to regular expressions or more advanced searches. If the secrets you’re searching for include a hardcoded value (e.g. a prefix), then tools like GitGot are available to handle the searching for you.

The Events API provides a firehose of all public events at /events. This is useful if you want to watch for certain events happening across all of GitHub, though as we detail in the What Didn’t Work section, this approach isn’t feasible due to request rate limits. Instead, watching the events for a given repository, organization, or user meets our needs for secret detection.
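Polling the events for a single repository could look roughly like the sketch below. It uses only the standard library, sends the previous `ETag` so that unchanged feeds return `304 Not Modified`, and reads the `X-Poll-Interval` header to decide how long to wait. The function names and the 60-second fallback are assumptions of this example.

```python
import json
import urllib.error
import urllib.request

API_ROOT = "https://api.github.com"


def fetch_repo_events(owner, repo, etag=None, token=None):
    """Fetch one page of repository events.

    Returns (events, etag, poll_interval_seconds).
    """
    request = urllib.request.Request(f"{API_ROOT}/repos/{owner}/{repo}/events")
    request.add_header("Accept", "application/vnd.github+json")
    if etag:
        request.add_header("If-None-Match", etag)
    if token:
        request.add_header("Authorization", f"Bearer {token}")
    try:
        with urllib.request.urlopen(request) as response:
            events = json.load(response)
            new_etag = response.headers.get("ETag")
            interval = int(response.headers.get("X-Poll-Interval", "60"))
    except urllib.error.HTTPError as err:
        if err.code != 304:  # 304 means nothing changed since the last poll
            raise
        events, new_etag = [], etag
        interval = int(err.headers.get("X-Poll-Interval", "60"))
    return events, new_etag, interval


def new_push_heads(events, seen_ids):
    """Return (repo name, head SHA) for each PushEvent not seen before."""
    heads = []
    for event in events:
        if event["id"] in seen_ids:
            continue
        seen_ids.add(event["id"])
        if event["type"] == "PushEvent":
            heads.append((event["repo"]["name"], event["payload"]["head"]))
    return heads
```

A caller would loop over `fetch_repo_events`, sleep for the returned interval, and pass each batch through `new_push_heads` to decide which commits to scan.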

Once we have the events, we need a way to execute our secret detection tools with the correct context. We recognized a gap in existing tooling here and created secret-bridge as a result.

03. Making Things Easier with Secret-bridge

Without control of developer machines or the CI pipeline, you can still get near-real-time information on commits by polling the GitHub Events API or receiving webhook events from GitHub. Unfortunately, most secret detection tools are built with ad-hoc scans in mind, and don’t provide functionality that allows them to be used with real-time data.

To bridge this gap, we’ve built secret-bridge, a tool to automate the execution of secret detection tools based on repository events.

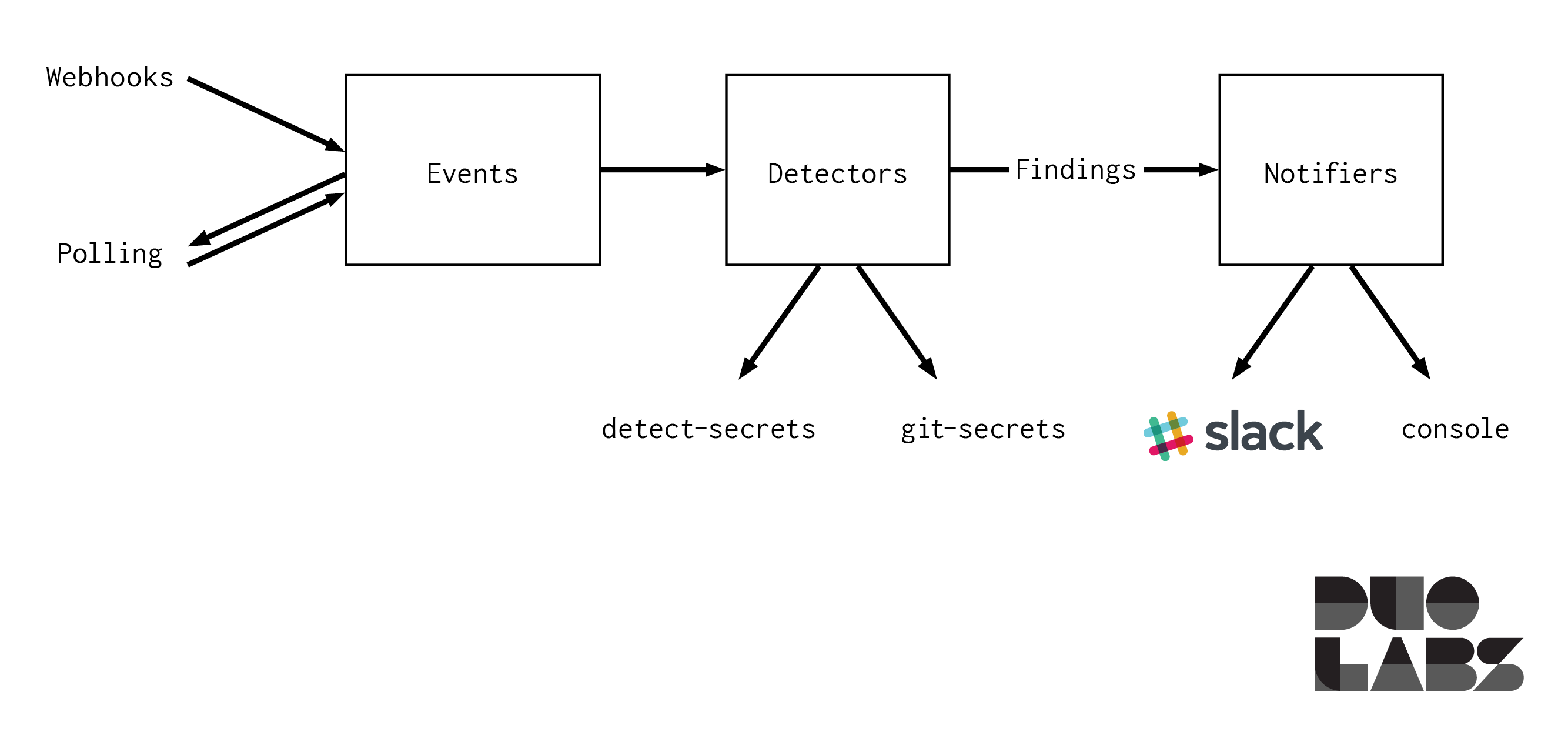

With the above scenario (no control or limited control of repositories and developer machines) in mind, secret-bridge allows secret discovery via webhooks and real-time polling of the Events API. Rather than reinventing the wheel, we gather events that indicate a new commit was pushed, and then route the commit contents to one or more detectors (such as git-secrets). This allows us to leverage the strength of existing secret scanning tools to operate on real-time commit contents. The outputs of these detectors are then routed to configurable notifiers, which could send a Slack message or an email, page your security team, or simply write to a logfile. Notifiers and detectors can be mixed and matched.
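The routing described above can be pictured with the following sketch. It is not secret-bridge's actual code or API: the `Finding` type, the `Detector` and `Notifier` signatures, and the `route` function are hypothetical names chosen to illustrate how detectors and notifiers can be mixed and matched.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass
class Finding:
    repo: str
    commit: str
    detail: str


# Hypothetical interfaces for illustration; not secret-bridge's real API.
Detector = Callable[[str, str], List[Finding]]  # (repo, commit) -> findings
Notifier = Callable[[Finding], None]


def route(
    pushes: Iterable[Tuple[str, str]],
    detectors: List[Detector],
    notifiers: List[Notifier],
) -> int:
    """Run every detector on every pushed commit and fan findings out to notifiers.

    Returns the total number of findings.
    """
    count = 0
    for repo, commit in pushes:
        for detect in detectors:
            for finding in detect(repo, commit):
                count += 1
                for notify in notifiers:
                    notify(finding)
    return count
```

Because detectors and notifiers are plain callables here, a wrapper around a command-line scanner or a Slack notifier would each be a single function.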

Event polling is excellent when you want to search across a range of users, repositories, and organizations, but requires requests to the GitHub API on a regular basis, and can be subject to rate limiting, meaning event polling is not quite real time. Webhooks deliver events like new pushes to a repository in real time, but require that you have the privileges on your monitored repositories to set them up. Secret-bridge allows you to use either approach interchangeably.

Secret-bridge is also extensible. We’ve built wrappers around two existing secret detection tools, and new detectors and notifiers can be added with minimal effort.

04. Conclusion

When it comes to mitigating the impact of accidentally committing secrets to a service provider like GitHub, detection is the first step. Knowing what options are available depending on your scenario gives a head start towards developing continuous monitoring.

We’re excited about expanding these existing capabilities with secret-bridge, and we hope that the community is able to use the tool to match their own needs.

05. Additional Information

Limitations of Existing Methods

The techniques described in How Bad Can It Git? worked very well, but relied on the ability to construct search strings that could be submitted to the GitHub Search API to find files possibly containing secret data. This works well for API tokens that have common prefixes, or private keys that have a well-defined serialization format, but secrets don’t always have these markers. Sometimes they are hex strings embedded in a poorly-named variable deep in a config file. Secrets like these are not easy to search for.

Tools like truffleHog overcome this by using heuristics such as “Base64 string with high Shannon entropy,” but are generally designed to work as part of the development and CI workflows, which is the key observation that led us to build secret-bridge.
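The entropy heuristic quoted above can be sketched in a few lines. Shannon entropy here is computed over the characters of a candidate string, in bits per character. The `looks_random` helper, its 4.5-bit threshold, and its minimum length of 20 are illustrative assumptions, not values taken from any particular tool's configuration.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Entropy, in bits per character, of the string's character distribution."""
    if not text:
        return 0.0
    total = len(text)
    counts = Counter(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_random(text: str, threshold: float = 4.5, min_length: int = 20) -> bool:
    """Flag long strings whose characters are spread out enough to resemble a key."""
    return len(text) >= min_length and shannon_entropy(text) > threshold
```

A string of one repeated character scores 0 bits, while a string of 32 distinct characters scores 5 bits, so ordinary identifiers and words tend to fall below the threshold and random keys above it.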

What Didn’t Work

In the course of our research, we evaluated the possibility of using the GitHub Events API to look at every new commit across all of GitHub in real-time. Specifically, we wanted to determine if we would be able to use the API to gather every public event while staying within the requested rate limits.

To do this, we wrote a simple script that worked like this:

- Get the first page of events and record the ID of the newest event

- Wait 10 seconds (to simulate retrieving 10 pages at roughly one page per second)

- Get the last page of events and record the ID of the oldest event

- Compare the IDs to determine if the oldest ID on the last page is newer than the ID we first recorded.

  - If it is, then more than 10 pages of events have been generated within 10 seconds, causing us to miss events

  - If it’s not, then, assuming we could iterate through each page within 10 seconds, we should be able to capture all events
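The steps above can be sketched as follows. This is a reconstruction for illustration rather than the script we ran: it assumes event IDs are integers (encoded as strings) that increase over time, that the feed spans 10 pages, and that each page lists the newest events first. The `fetch` and `sleep` parameters exist only so the logic can be exercised without network access.

```python
import json
import time
import urllib.request

EVENTS_URL = "https://api.github.com/events"


def fetch_event_ids(page):
    """Return the event IDs on one page of the public feed, newest first."""
    with urllib.request.urlopen(f"{EVENTS_URL}?page={page}") as response:
        return [int(event["id"]) for event in json.load(response)]


def missed_events(newest_before, oldest_after):
    """True if the oldest event still in the feed is newer than the one recorded."""
    return oldest_after > newest_before


def run_trial(last_page=10, delay_seconds=10, fetch=fetch_event_ids, sleep=time.sleep):
    """Run one trial of the experiment and report whether events were missed."""
    newest_before = fetch(1)[0]          # newest event on the first page
    sleep(delay_seconds)                 # stand-in for walking the pages
    oldest_after = fetch(last_page)[-1]  # oldest event on the last page
    return missed_events(newest_before, oldest_after)
```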

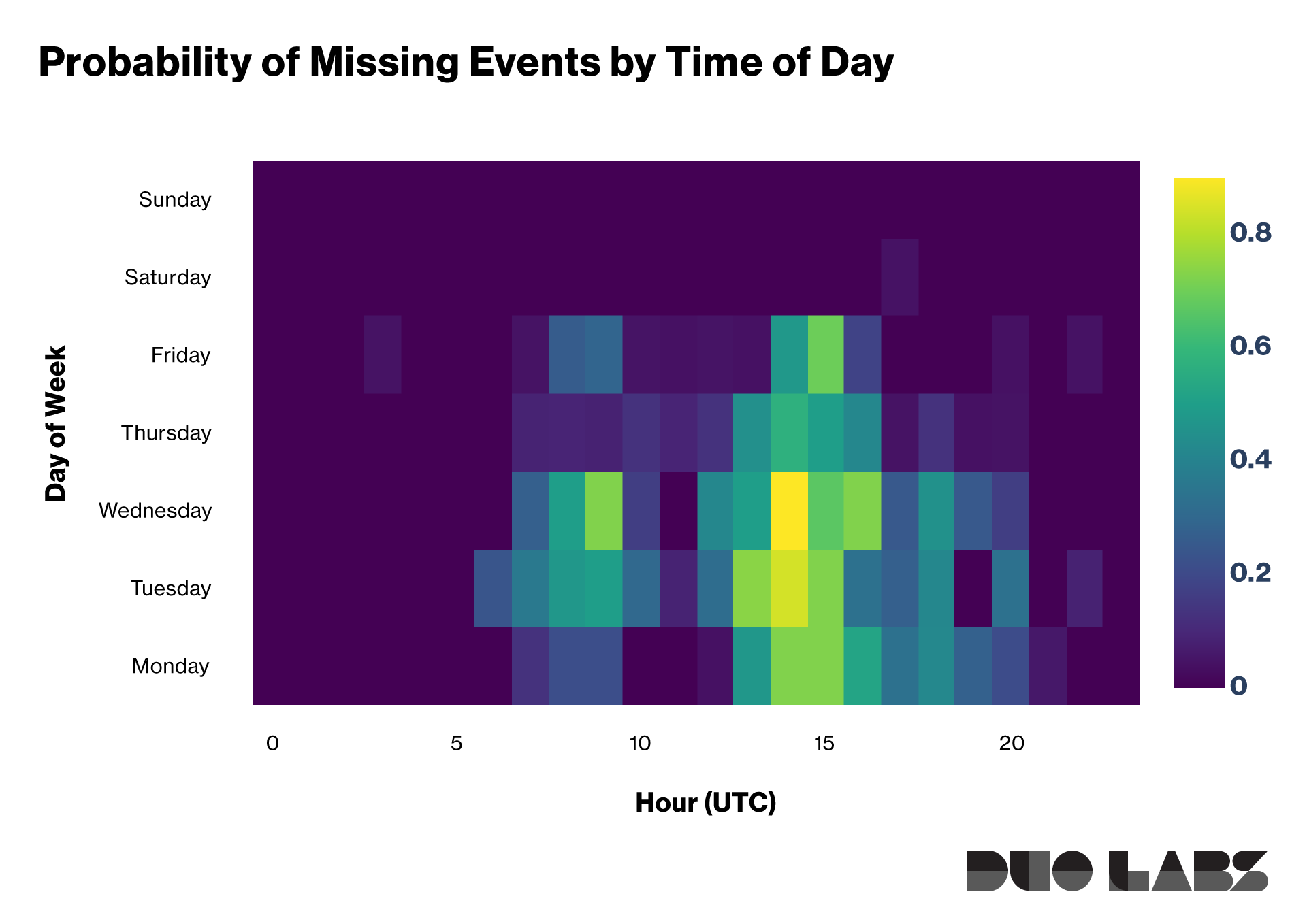

We ran this experiment every 5 minutes for roughly two weeks. During this time, we found that even when iterating through all pages in 10 seconds, we would miss events as much as 80% of the time during peak hours.

The following chart shows a heatmap of how often we were able to capture all events during our polling. The intensity of the heatmap indicates how often we would miss events for a given hour. For example, on Wednesday at 14:00UTC, there is an 80% chance that one or more events would be missed by polling the Events stream. On Sunday at 00:00UTC, it is likely that one would be able to capture all events, which is expected since there is likely less activity happening during that time.

While for this short experiment we used a 10-second delay between polls (executing the polls every 5 minutes), the actual required interval is even longer. According to GitHub’s API usage guidelines, the X-Poll-Interval response header specifies a requested number of seconds to wait between requests, which may increase during times of high server load. During our sampling, we recorded the value of this header to be 60 seconds, so it’s safe to say that with this approach, we wouldn’t be able to keep up with the volume of events generated by the GitHub community. Some implementations, like GH Archive at the time of this writing, request pages every 0.75 seconds and appear to be successful at capturing most events. However, since this isn’t an approach approved by GitHub, we don’t recommend it.